Text-to-image AI

The most significant new disruptor of photography is now charging straight at us. It is text-to-image artificial intelligence: T2I AI. It generates images in response to text input.

I was fortunate to receive sponsorship from OpenAI that allowed me to practise the new art of engineering prompts (more on this below).

I used the generated images as the start of a visual journey: I would take one and explore the hints and ideas it held, change it, upload it for more variations, expand the image or clone from other images.

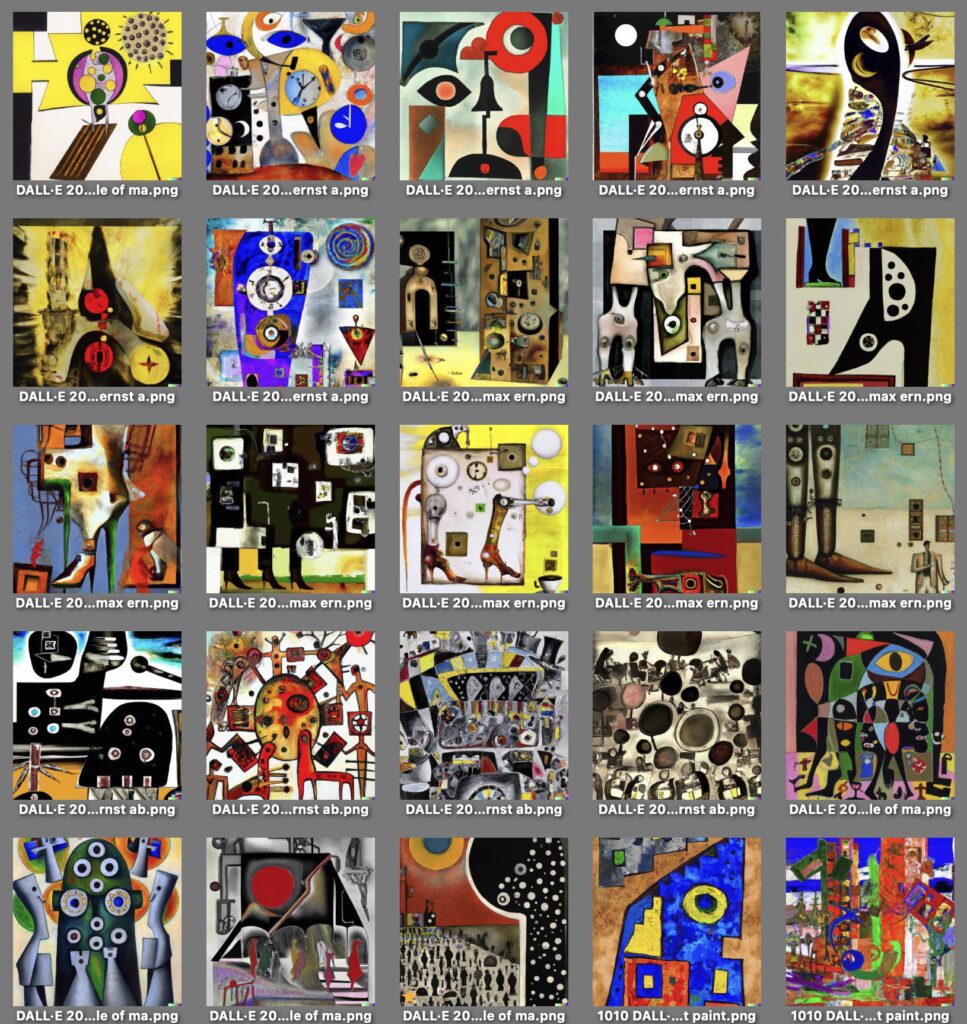

Here: a sample of images generated from a line of Emily Dickinson’s poetry in the style of various artists.

Prompt engineering

T2I AI (text-to-image artificial intelligence) systems such as DALL-E, Midjourney and Motionleap sit around waiting for the input of prompts to get to work. When they receive the text prompt that you send (from an online console or within an app), e.g. ‘a red rose floating on the surface of a lake with mountains in the background’, they dig into their knowledge base to start constructing an image. It’s important to understand that this process does not work by finding images in a database to match your prompt. In fact, there are no images in the database as such; there are generalised ‘notions’ of things. If AI had to construct images from existing images, it would take a great deal of computing. Instead, results usually come in a few seconds.

Imagine I ask you to paint a red rose. If you first had to find a suitable picture to copy, that would take you some time. Instead, as soon as you hear the words ‘a red rose’, some general notion of a red rose comes to mind and you can start painting immediately. As you do so, you will be critical of what you’re doing: it may not look right, so you might scrub it and start again. This time the picture will look closer to your idea of a red rose. If you’re not an experienced artist, you may need a few tries, and with each try you get closer to what is acceptable. That is very much how T2I works.

There are two parts (they call them ‘neural networks’) to the AI: the generator, i.e. the bit that makes the image, and the discriminator, the bit that judges how well the ‘painting’ matches your prompt. So the generator will offer various examples of ‘rose’, building on its ‘knowledge’ of roses, having been trained on vast numbers of images of roses to ‘learn’ the general characteristics that also separate them from other flowers like dandelions, lilies or orchids. The discriminator then compares what the generator delivers with the prompt, asking for new options if not ‘satisfied’; otherwise it accepts the image and sends it to you, the prompter.
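To make the generate-and-judge idea concrete, here is a toy sketch in Python. It is only an analogy under loud assumptions: the feature words, the `generate`, `judge` and `paint` functions and the scoring rule are all invented for illustration, and no real T2I system works on hand-written word lists like this. It simply shows a generator proposing candidates and a judge accepting one once it matches what was asked for.

```python
import random

# Toy stand-ins: a "concept" is just a set of feature words.
# A real system learns its notions from images; these are hand-written.
KNOWN_FEATURES = ["red", "petals", "thorns", "stem", "yellow", "trumpet", "spots"]

def generate(rng, n_features=3):
    """Generator: propose a candidate 'painting' as a random set of features."""
    return set(rng.sample(KNOWN_FEATURES, n_features))

def judge(candidate, wanted):
    """Discriminator: fraction of the wanted features found in the candidate."""
    return len(candidate & wanted) / len(wanted)

def paint(wanted, threshold=1.0, max_tries=1000, seed=0):
    """Keep generating until the judge is satisfied or the tries run out."""
    rng = random.Random(seed)
    best, best_score = None, -1.0
    for attempt in range(1, max_tries + 1):
        candidate = generate(rng)
        score = judge(candidate, wanted)
        if score > best_score:
            best, best_score = candidate, score
        if score >= threshold:
            break  # the judge accepts this candidate
    return best, best_score, attempt

rose = {"red", "petals", "thorns"}
image, score, tries = paint(rose)
print(f"kept {sorted(image)} with score {score:.2f} after {tries} tries")
```

As with the painter who scrubs the canvas and starts again, the loop usually needs several tries before the judge is ‘satisfied’.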

Now, what makes AI smart is that modern systems ‘know’ how things should look even if you’ve not been specific. For example, a rose floating on water will be reflected in it: the image will show a reflection even if you don’t specifically ask for reflections. In fact, if you do ask for a reflection, the words you use will push the next part of the prompt towards the back, and that could mean that part of the prompt is given lower prominence or even ignored. This can cause errors: reflections can be taken for granted, whereas mountains in the background, although important to you, are an optional feature as far as the AI is concerned. So a prompt asking for reflections and mountains may deliver the reflection but no mountains (see left image below).
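The ordering point can be sketched as a small helper that puts the elements you care about first and leaves out anything the system is likely to supply unprompted. This is a minimal illustration: `build_prompt` and its priority scheme are hypothetical, and the idea that earlier words carry more weight is my observation rather than a documented rule of any particular system.

```python
def build_prompt(elements):
    """Join prompt elements, most important first.

    `elements` is a list of (text, priority) pairs. A lower priority number
    means the element matters more and is placed earlier. Elements with
    priority None are dropped, for things the system tends to add anyway.
    """
    kept = [(priority, index, text)
            for index, (text, priority) in enumerate(elements)
            if priority is not None]
    kept.sort()  # by priority, then by original position
    return " ".join(text for _, _, text in kept)

prompt = build_prompt([
    ("a red rose floating on the surface of a lake", 1),
    ("with reflections", None),  # taken for granted, so left out
    ("with mountains in the background", 2),
])
print(prompt)
```

Here the reflection is left to the AI, so the mountains stay close to the front of the prompt.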

There’s a whole lot more to writing prompts or seeds, and different systems respond differently, e.g. to spelling mistakes. Thus arises a fancy new skill: ‘prompt engineering’. Furthermore, much depends on how well trained the AI is. I found, for instance, that DALL-E ‘knows’ the difference between the painting styles of Sonia Delaunay and Robert Delaunay. Some systems can’t distinguish between digital art and cartoon. We’re just at the beginning of learning what they can and cannot do. You see below two attempts at ‘a red rose floating on the surface of a rippling lake with fujiyama in the background’ from DALL-E and from DeepAI. Neither is better than the other; you could say they differ stylistically.

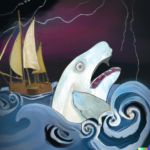

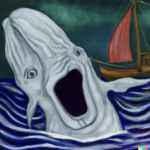

Sometimes you can obtain astonishingly inventive results. As with so many other systems, if you give the right instructions you get results that can range from pleasing to amazing. My daughter – a senior figure in publishing – asked for a briefing illustration for their cover designer. The book was a reprint of ‘Moby Dick’. The first results were predictable but boring, until her husband suggested we ask for an image in the style of Edvard Munch. You can guess which images arose from that prompt.

Beginnings

The first step of the T2I journey is often decided even before you know it, because you already have an idea what you want the AI to make for you. A painting by Boucher, a super-hero in cartoon style, a photo-real panda sun-bathing by a pool?

The only limit is your imagination. I started tentatively. Here is the first image – amongst the very first dozen – that quickened my pulse. The prompt was ‘ukiyo-e landscape with stream in foreground and stormy sky at sunrise milky way with flock of sheep in foreground’. I’m not sure about the ukiyo-e aspect, although there are stylistic hints such as the rendering of the foreground, but this is close to (if not better than) what I had in mind. I like, especially, the beaded stream and the misty atmosphere.

49 Syllables on Life

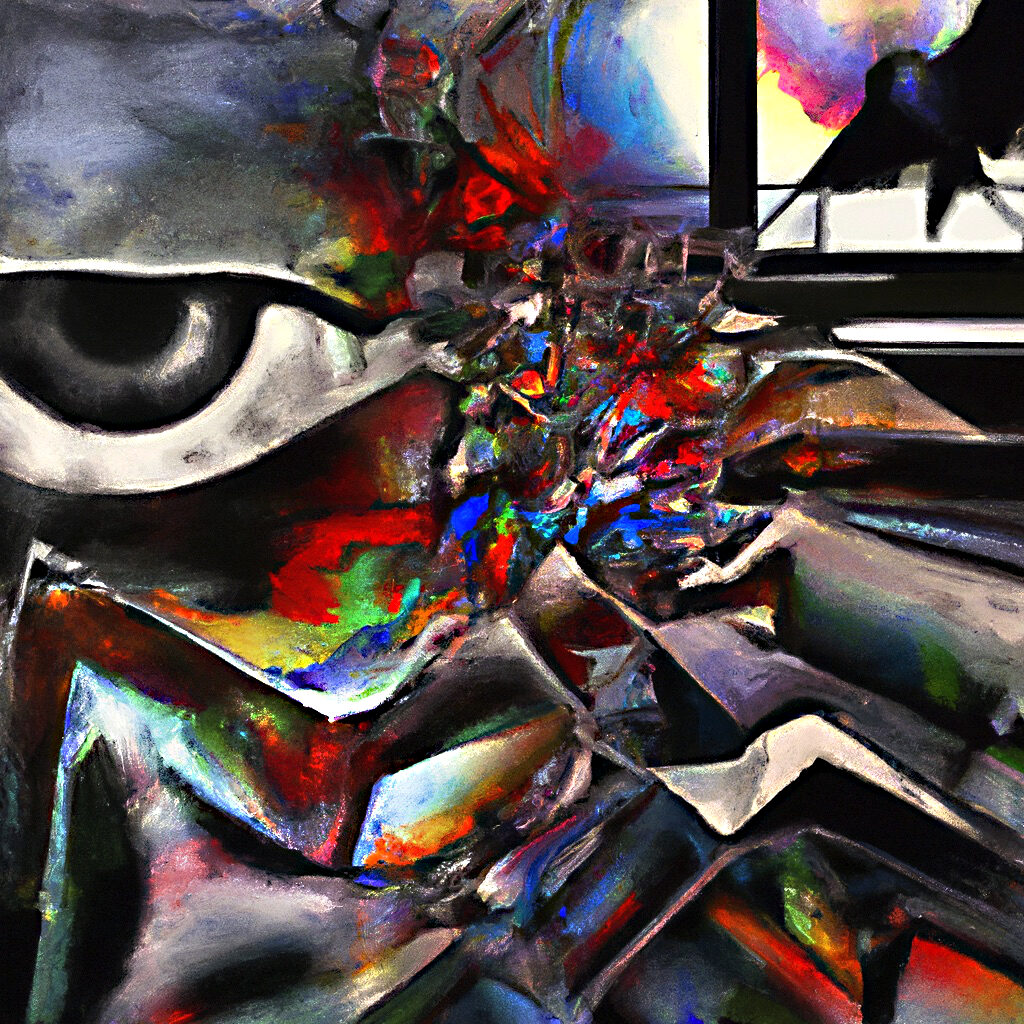

Before long, it was time to try what was always in my mind to do: prompt with poetry. I started with my own poetry. I was intrigued to learn how the AI would handle obscure and difficult language such as ‘In the space that lies between empty and nothing the pain of disunion leads to life’s first intake of breath that will rouse the stars to crochet with the strands of time but leave holes for light to return safe home’. You see here one generation from that prompt, asking for digital art in the style of Cubist art.

The results were most entertaining: sometimes mesmerizing, sometimes surprising and sometimes shooting plain past the target. Huge fun: it was like working with an erratic assistant tasked with sketching ideas or digging up anything that looks vaguely relevant.

I selected and manipulated 49 images to make my first NFT book, 49 Syllables on Life, available (soon) from Published NFT.

Lady of the Lake

It’s a good thing that how something started is of little importance, because I have no idea where the idea for a geisha and a lake came from. With various prompts referring to a geisha standing by a lake, the AI-generated images stimulated a narrative. This told of her waking to find herself alone. A note left on the pillow next to her says “I have to go. You do not have to wait.” But she waits. And waits, and waits … through storms, long nights and bitter cold. With this simple story line, I could direct the T2I to specific visual goals.

I added layers of movement to selected and worked images using Motionleap to help the images come to life. These have been collected as phygital works: a beautiful dye-diffused metal print plus NFT of the animated GIF.

See clubrare.xyz for the drop.

Faithful Warrior

Thanks to the encouragement of Eva Otilda-Nevraj, then of ClubRare, I started work on another series, with another fantasy narrative. This time, the purpose was explicitly to deliver images that could carry a story, almost to illustrate one. The story was that of a faithful Samurai warrior who watches helplessly as his Master is abducted by aliens. The Warrior swears to save his Master, setting him on a journey across the world and to galactic systems unknown to humanity.

While working intensively at this – generating hundreds of images a week – I found the AI seemed sometimes to be thinking ahead of me, and delivering surprising turns to the imagery.

Heart

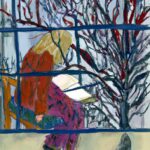

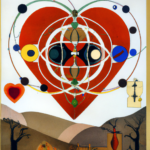

It was time to use a better quality of poetry than mine. I turned to Emily Dickinson (my copy of her complete works is over 50 years old) and decided on this:

Heart, we will forget him!

You and I, tonight!

You may forget the warmth he gave,

I will forget the light.

I chose this because it brings together universal themes of love and yearning around the central metonym of the heart. Using this as a prompt and asking for paintings in the style of artists as varied as Malevich, Mondrian, Rivera, Klee and Klimt gave me the most fun I’ve had in a long, long time.

The most surprising results came from asking for a blend of styles. Opposite is the result of blending Max Ernst with Hilma af Klint: you can see stylistic elements typical of both artists in the image. Amazing!

Nonsense

There’s something deliciously naughty about the idea of prompting a sophisticated artificial intelligence machine bristling with more than 12 billion parameters with a line of nonsense poetry. Yet it leads to surprisingly interesting, coherent images.

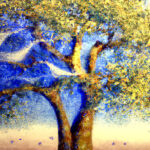

So far, the best results have come from Lewis Carroll’s inimitable Jabberwocky. I asked for a painting in the style of Kandinsky blended with Hilma af Klint oil painting of ‘Twas brillig, and the slithy toves Did gyre and gimble in the wabe’. It is easy to see the Kandinsky flourishes, but also the colour palette and tendency towards circular forms of Hilma af Klint in this image.

If you’re familiar with the poem, you’ll find it easy to imagine how the creatures in this selection fit into Carroll’s fantastical little drama.